brainpy.math.surrogate.relu_grad

- brainpy.math.surrogate.relu_grad(x, alpha=0.3, width=1.0)

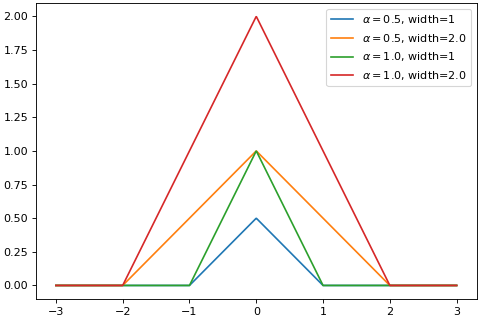

Spike function whose backward pass uses a ReLU-shaped surrogate gradient [1].
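The forward function defined below is a step, and its true derivative is zero almost everywhere, which is why a surrogate gradient is needed. The short sketch below uses plain JAX rather than BrainPy, and the name `heaviside_spike` is hypothetical. It implements only the step and differentiates it with ordinary autodiff, which returns zero gradients.

```python
import jax
import jax.numpy as jnp


def heaviside_spike(x):
    """Step-shaped spike function: 1.0 where x >= 0, 0.0 elsewhere."""
    return jnp.where(x >= 0, 1.0, 0.0)


xs = jnp.array([-1.0, -0.1, 0.0, 0.5])
print(heaviside_spike(xs))  # [0. 0. 1. 1.]

# Plain autodiff through the step: x only enters through the comparison,
# which JAX treats as non-differentiable, so every gradient is zero and
# no learning signal would flow back through the spike.
print(jax.vmap(jax.grad(heaviside_spike))(xs))  # [0. 0. 0. 0.]
```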

The forward function:

\[g(x) = \begin{cases} 1, & x \geq 0 \\ 0, & x < 0 \end{cases}\]

The backward function:

\[g'(x) = \mathrm{ReLU}\left(\alpha \cdot (\mathrm{width} - |x|)\right)\]

Example: plot the surrogate gradient for several values of alpha and width.

    >>> import brainpy as bp
    >>> import brainpy.math as bm
    >>> import matplotlib.pyplot as plt
    >>> xs = bm.linspace(-3, 3, 1000)
    >>> bp.visualize.get_figure(1, 1, 4, 6)
    >>> for s in [0.5, 1.]:
    ...     for w in [1, 2.]:
    ...         # Element-wise gradient of relu_grad with respect to xs
    ...         grads = bm.vector_grad(bm.surrogate.relu_grad)(xs, s, w)
    ...         plt.plot(bm.as_numpy(xs), bm.as_numpy(grads), label=r'$\alpha=$' + f'{s}, width={w}')
    >>> plt.legend()
    >>> plt.show()
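The following is a minimal sketch of how the forward and backward functions above can be wired into JAX autodiff with `jax.custom_vjp`. The forward pass keeps the exact step, and the backward pass substitutes ReLU(alpha * (width - |x|)) for its derivative. The names `relu_surrogate_spike`, `_relu_surrogate_fwd`, and `_relu_surrogate_bwd` are hypothetical. This is not BrainPy's source, only an illustration of the technique under the assumption that alpha and width are fixed, non-differentiable hyperparameters.

```python
from functools import partial

import jax
import jax.numpy as jnp


# Hypothetical illustration of the technique, not BrainPy's implementation.
# alpha and width are treated as static hyperparameters (nondiff_argnums),
# so gradients are only taken with respect to x.
@partial(jax.custom_vjp, nondiff_argnums=(1, 2))
def relu_surrogate_spike(x, alpha, width):
    # Forward pass: the exact step g(x) = 1 if x >= 0 else 0.
    return jnp.where(x >= 0, 1.0, 0.0).astype(x.dtype)


def _relu_surrogate_fwd(x, alpha, width):
    # Keep x as the residual needed by the backward pass.
    return relu_surrogate_spike(x, alpha, width), x


def _relu_surrogate_bwd(alpha, width, x, g):
    # Backward pass: replace the step's derivative (zero almost everywhere)
    # with ReLU(alpha * (width - |x|)), multiplied by the incoming cotangent g.
    surrogate = jnp.maximum(alpha * (width - jnp.abs(x)), 0.0)
    return (g * surrogate,)


relu_surrogate_spike.defvjp(_relu_surrogate_fwd, _relu_surrogate_bwd)

xs = jnp.array([-1.5, -0.5, 0.0, 0.5, 1.5])
spikes = relu_surrogate_spike(xs, 0.3, 1.0)
# The gradient of the summed spikes equals the element-wise surrogate gradient.
grads = jax.grad(lambda x: relu_surrogate_spike(x, 0.3, 1.0).sum())(xs)
print(spikes)  # [0. 0. 1. 1. 1.]
print(grads)
```

With alpha = 0.3 and width = 1.0 (the defaults in the signature), the surrogate gradient is 0.3 at x = 0 and 0.15 at |x| = 0.5, and it vanishes for |x| >= 1. In general it peaks at alpha * width and is nonzero only on the interval (-width, width).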


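- Parameters:

  - x – The input data.
  - alpha (float) – Scale factor of the surrogate gradient; the gradient at x = 0 equals alpha * width.
  - width (float) – Half-width of the surrogate window; the gradient is zero wherever |x| >= width.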

- Returns:

out – The spiking state.

- Return type:

jax.Array
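Because the forward function is applied element-wise, the returned spiking state is a 0/1 array with the same shape as x. The sketch below feeds such an array through a summed loss to show that the surrogate lets a nonzero gradient reach the weights of a preceding linear layer. It is again a hypothetical plain-JAX stand-in rather than BrainPy's implementation; `spike` and `spike_count` are illustrative names.

```python
from functools import partial

import jax
import jax.numpy as jnp


# Hypothetical stand-in for a ReLU-surrogate spike function (not BrainPy's code).
@partial(jax.custom_vjp, nondiff_argnums=(1, 2))
def spike(x, alpha, width):
    # Forward: exact step, same shape and dtype as x.
    return jnp.where(x >= 0, 1.0, 0.0).astype(x.dtype)


def _spike_fwd(x, alpha, width):
    return spike(x, alpha, width), x


def _spike_bwd(alpha, width, x, g):
    # Backward: ReLU(alpha * (width - |x|)) scaled by the incoming cotangent.
    return (g * jnp.maximum(alpha * (width - jnp.abs(x)), 0.0),)


spike.defvjp(_spike_fwd, _spike_bwd)


def spike_count(w, inputs):
    """Total number of spikes emitted by a linear layer followed by spike()."""
    membrane = inputs @ w  # shape (batch, n_out)
    return spike(membrane, 0.3, 1.0).sum()


key_w, key_x = jax.random.split(jax.random.PRNGKey(0))
w = 0.5 * jax.random.normal(key_w, (4, 3))
inputs = jax.random.normal(key_x, (8, 4))

out = spike(inputs @ w, 0.3, 1.0)
print(out.shape, out.dtype)  # (8, 3) float32

# Without the surrogate this gradient would be identically zero.
grad_w = jax.grad(spike_count)(w, inputs)
print(grad_w.shape)  # (4, 3)
```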

References