Concept 2: Dynamical System#

BrainPy supports modelings in brain simulation and brain-inspired computing.

All these supports are based on one common concept: Dynamical System via brainpy.DynamicalSystem.

Therefore, it is essential to understand:

what is

brainpy.DynamicalSystem?how to define

brainpy.DynamicalSystem?how to run

brainpy.DynamicalSystem?

import brainpy as bp

import brainpy.math as bm

bm.set_platform('cpu')

bp.__version__

'2.4.3.post3'

What is DynamicalSystem?#

All models used in brain simulation and brain-inspired computing is DynamicalSystem.

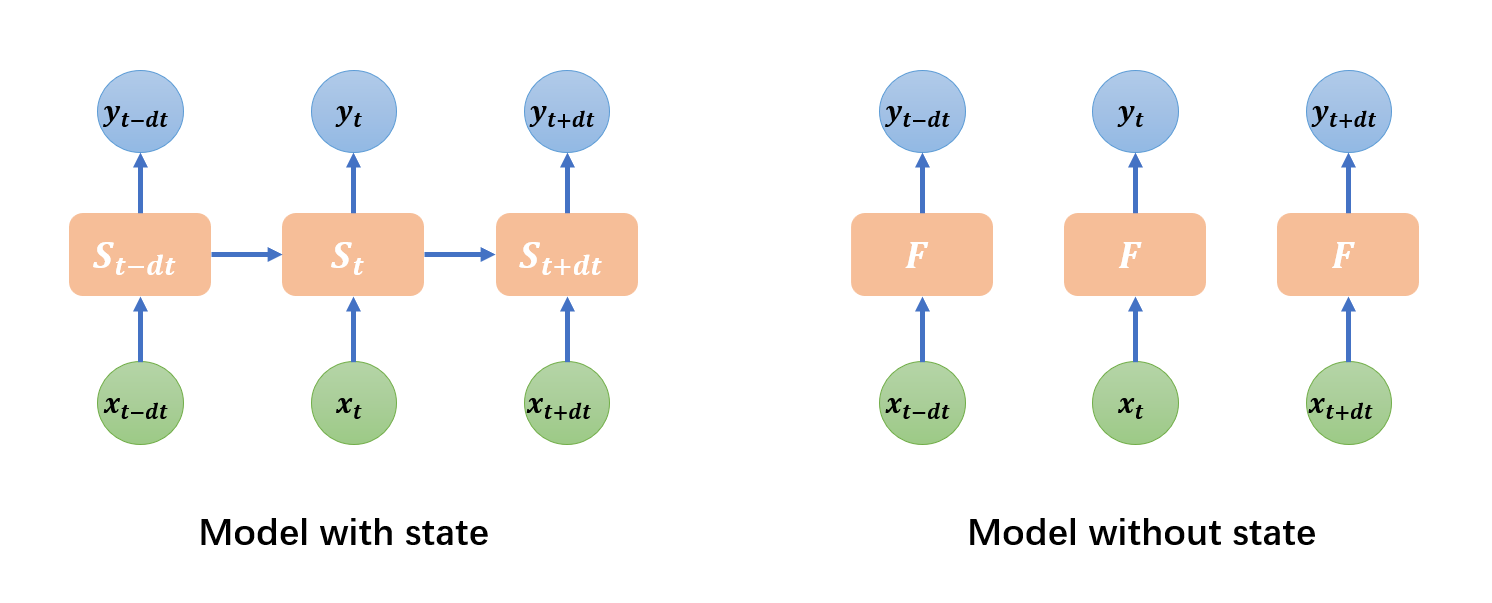

A DynamicalSystem defines the updating rule of the model at single time step.

For models with state,

DynamicalSystemdefines the state transition from \(t\) to \(t+dt\), i.e., \(S(t+dt) = F\left(S(t), x, t, dt\right)\), where \(S\) is the state, \(x\) is input, \(t\) is the time, and \(dt\) is the time step. This is the case for recurrent neural networks (like GRU, LSTM), neuron models (like HH, LIF), or synapse models which are widely used in brain simulation.However, for models in deep learning, like convolution and fully-connected linear layers,

DynamicalSystemdefines the input-to-output mapping, i.e., \(y=F\left(x, t\right)\).

How to define DynamicalSystem?#

Keep in mind that the usage of DynamicalSystem has several constraints in BrainPy.

1. .update() function#

First, all DynamicalSystem should implement .update() function, which receives two arguments:

class YourModel(bp.DynamicalSystem):

def update(self, s, x):

pass

s(or named as others): A dict, to indicate shared arguments across all nodes/layers in the network, likethe current time

t, orthe current running index

i, orthe current time step

dt, orthe current phase of training or testing

fit=True/False.

x(or named as others): The individual input for this node/layer.

We call s as shared arguments because they are same and shared for all nodes/layers. On the contrary, different nodes/layers have different input x.

Here, it is necessary to explain the usage of bp.share.

bp.share.save( ): The function saves shared arguments in the global context. User can save shared arguments in two ways, for example, if user want to set the current timet=100, the current time stepdt=0.1,the user can usebp.share.save("t",100,"dt",0.1)orbp.share.save(t=100,dt=0.1).bp.share.load( ): The function gets the shared data by thekey, for example,bp.share.load("t").bp.share.clear_shargs( ): The function clears the specific shared arguments in the global context, for example,bp.share.clear_shargs("t").bp.share.clear( ): The function clears all shared arguments in the global context.

Example: LIF neuron model for brain simulation

Here we illustrate the first constraint of DynamicalSystem using the Leaky Integrate-and-Fire (LIF) model.

The LIF model is firstly proposed in brain simulation for modeling neuron dynamics. Its equation is given by

For the details of the model, users should refer to Wikipedia or other resource.

class LIF_for_BrainSimulation(bp.DynamicalSystem):

def __init__(self, size, V_rest=0., V_th=1., tau=5., mode=None):

super().__init__(mode=mode)

# this model only supports non-batching mode

bp.check.is_subclass(self.mode, bm.NonBatchingMode)

# parameters

self.size = size

self.V_rest = V_rest

self.V_th = V_th

self.tau = tau

# variables

self.V = bm.Variable(bm.ones(size) * V_rest)

self.spike = bm.Variable(bm.zeros(size, dtype=bool))

# integrate differential equation with exponential euler method

self.integral = bp.odeint(f=lambda V, t, I: (-V + V_rest + I)/tau, method='exp_auto')

def update(self, x):

# define how the model states update

# according to the external input

t = bp.share.load('t')

dt = bp.share.load('dt')

V = self.integral(self.V, t, x, dt=dt)

spike = V >= self.V_th

self.V.value = bm.where(spike, self.V_rest, V)

self.spike.value = spike

return spike

2. Computing mode#

Second, explicitly consider which computing mode your DynamicalSystem supports.

Brain simulation usually builds models without batching dimension (we refer to it as non-batching mode, as seen in above LIF model), while brain-inspired computation trains models with a batch of data (batching mode or training mode).

So, to write a model applicable to abroad applications in brain simulation and brain-inspired computing, you need to consider which mode your model supports, one of them, or both of them.

Example: LIF neuron model for both brain simulation and brain-inspired computing

When considering the computing mode, we can program a general LIF model for brain simulation and brain-inspired computing.

To overcome the non-differential property of the spike in the LIF model for brain simulation, i.e., at the code of

spike = V >= self.V_th

LIF models used in brain-inspired computing calculate the spiking state using the surrogate gradient function. Usually, we replace the backward gradient of the spike with a smooth function, like

class LIF(bp.DynamicalSystem):

supported_modes = (bm.NonBatchingMode, bm.BatchingMode, bm.TrainingMode)

def __init__(self, size, f_surrogate=None, V_rest=0., V_th=1., tau=5., mode=None):

super().__init__(mode=mode)

# Parameters

self.size = size

self.num = bp.tools.size2num(size)

self.V_rest = V_rest

self.V_th = V_th

self.tau = tau

if f_surrogate is None:

f_surrogate = bm.surrogate.inv_square_grad

self.f_surrogate = f_surrogate

# integrate differential equation with exponential euler method

self.integral = bp.odeint(f=lambda V, t, I: (-V + V_rest + I) / tau, method='exp_auto')

# Initialize a Variable:

# - if non-batching mode, batch axis of V is None

# - if batching mode, batch axis of V is 0

self.V = bp.init.variable_(bm.zeros, self.size, self.mode)

self.V[:] = self.V_rest

self.spike = bp.init.variable_(bm.zeros, self.size, self.mode)

def reset_state(self, batch_size=None):

self.V.value = bp.init.variable_(bm.ones, self.size, batch_size) * self.V_rest

self.spike.value = bp.init.variable_(bm.zeros, self.size, batch_size)

def update(self, x):

t = bp.share.load('t')

dt = bp.share.load('dt')

V = self.integral(self.V, t, x, dt=dt)

# replace non-differential heaviside function

# with a surrogate gradient function

spike = self.f_surrogate(V - self.V_th)

# reset membrane potential

self.V.value = (1. - spike) * V + spike * self.V_rest

self.spike.value = spike

return spike

Model composition#

The LIF model we have defined above can be recursively composed to construct networks in brain simulation and brain-inspired computing.

The following code snippet utilizes the LIF model to build an E/I balanced network EINet, which is a classical network model in brain simulation.

class Exponential(bp.Projection):

def __init__(self, num_pre, post, prob, g_max, tau):

super(Exponential, self).__init__()

self.proj = bp.dyn.ProjAlignPostMg1(

comm=bp.dnn.EventCSRLinear(bp.conn.FixedProb(prob, pre=num_pre, post=post.num), g_max),

syn=bp.dyn.Expon.desc(post.num, tau=tau),

out=bp.dyn.CUBA.desc(),

post=post

)

def update(self, x):

return self.proj(x)

class EINet(bp.DynamicalSystem):

def __init__(self, num_exc, num_inh):

super().__init__()

self.E = LIF(num_exc, V_rest=-55, V_th=-50., tau=20.)

self.I = LIF(num_inh, V_rest=-55, V_th=-50., tau=20.)

self.E2E = Exponential(prob=0.02, num_pre=num_exc, post=self.E, g_max=1.62, tau=5.)

self.E2I = Exponential(prob=0.02, num_pre=num_exc, post=self.I, g_max=1.62, tau=5.)

self.I2E = Exponential(prob=0.02, num_pre=num_inh, post=self.E, g_max=-9.0, tau=10.)

self.I2I = Exponential(prob=0.02, num_pre=num_inh, post=self.I, g_max=-9.0, tau=10.)

def update(self, x):

# x is the background input

e2e = self.E2E(self.E.spike)

e2i = self.E2I(self.E.spike)

i2e = self.I2E(self.I.spike)

i2i = self.I2I(self.I.spike)

self.E(e2e + i2e + x)

self.I(e2i + i2i + x)

with bm.environment(mode=bm.nonbatching_mode):

net1 = EINet(3200, 800)

Moreover, our LIF model can also be used in brain-inspired computing scenario. The following AINet uses the LIF model to construct a model for AI training.

# This network can be used in AI applications

class AINet(bp.DynamicalSystem):

def __init__(self, sizes):

super().__init__()

self.neu1 = LIF(sizes[0])

self.syn1 = bp.dnn.Dense(sizes[0], sizes[1])

self.neu2 = LIF(sizes[1])

self.syn2 = bp.dnn.Dense(sizes[1], sizes[2])

self.neu3 = LIF(sizes[2])

def update(self, x):

return x >> self.neu1 >> self.syn1 >> self.neu2 >> self.syn2 >> self.neu3

with bm.environment(mode=bm.training_mode):

net2 = AINet([100, 50, 10])

How to run DynamicalSystem?#

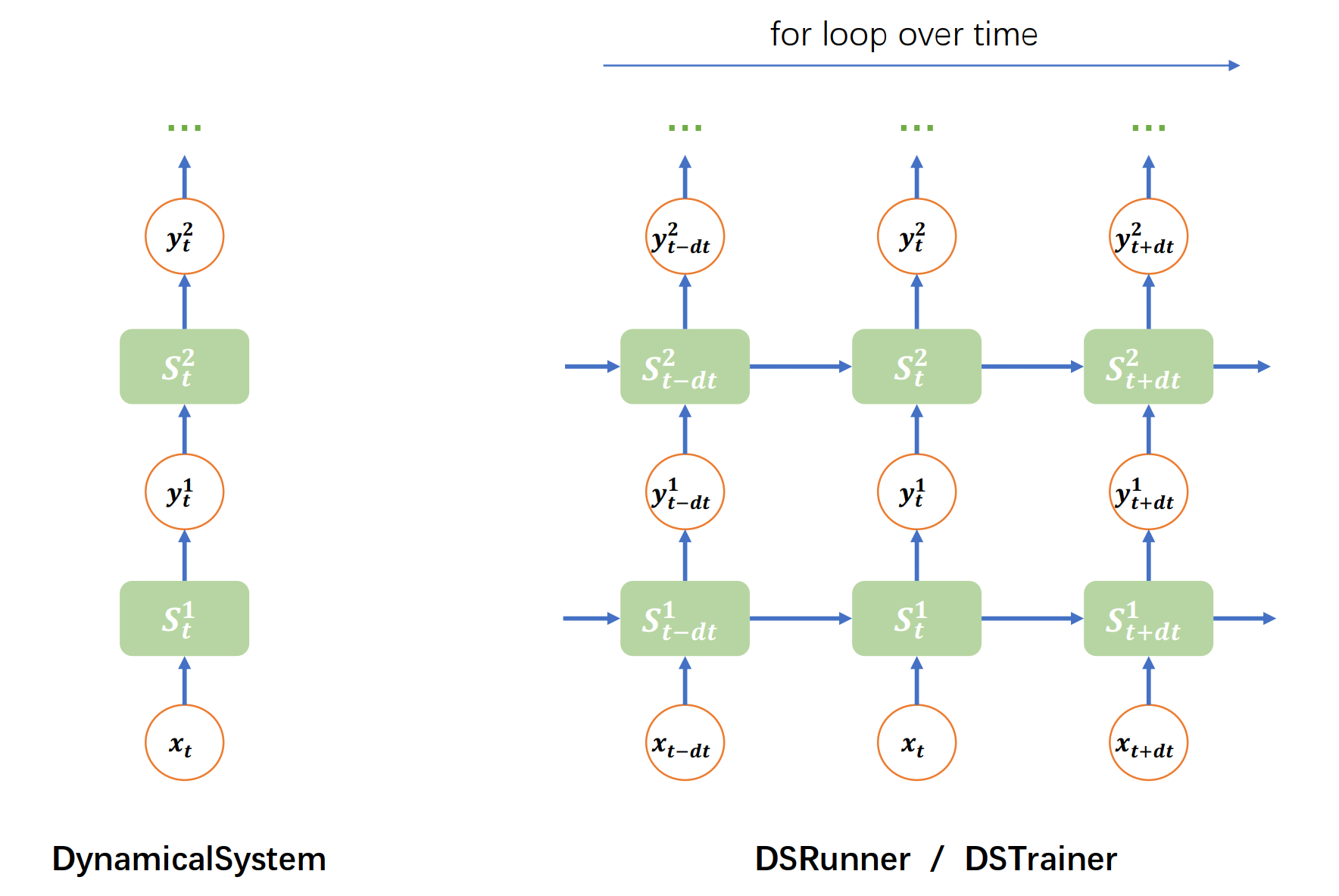

As we have stated above that DynamicalSystem only defines the updating rule at single time step, to run a DynamicalSystem instance over time, we need a for loop mechanism.

1. brainpy.math.for_loop#

for_loop is a structural control flow API which runs a function with the looping over the inputs. Moreover, this API just-in-time compile the looping process into the machine code.

Suppose we have 200 time steps with the step size of 0.1, we can run the model with:

def run_net2(t, currents):

bp.share.save(t=t)

return net2(currents)

with bm.environment(dt=0.1):

# construct the given time steps

times = bm.arange(200) * bm.dt

# construct the inputs with shape of (time, batch, feature)

currents = bm.random.rand(200, 10, 100)

# run the model

net2.reset(10)

out = bm.for_loop(run_net2, (times, currents))

out.shape

(200, 10, 10)

2. brainpy.LoopOverTime#

Different from for_loop, brainpy.LoopOverTime is used for constructing a dynamical system that automatically loops the model over time when receiving an input.

for_loop runs the model over time. While brainpy.LoopOverTime creates a model which will run the model over time when calling it.

net2.reset(10)

looper = bp.LoopOverTime(net2)

out = looper(currents)

out.shape

(200, 10, 10)

3. brainpy.DSRunner#

Another way to run the model in BrainPy is using the structural running object DSRunner and DSTrainer. They provide more flexible way to monitoring the variables in a DynamicalSystem. The details users should refer to the DSRunner tutorial.

with bm.environment(dt=0.1):

runner = bp.DSRunner(net1, monitors={'E.spike': net1.E.spike, 'I.spike': net1.I.spike})

runner.run(inputs=bm.ones(1000) * 20.)

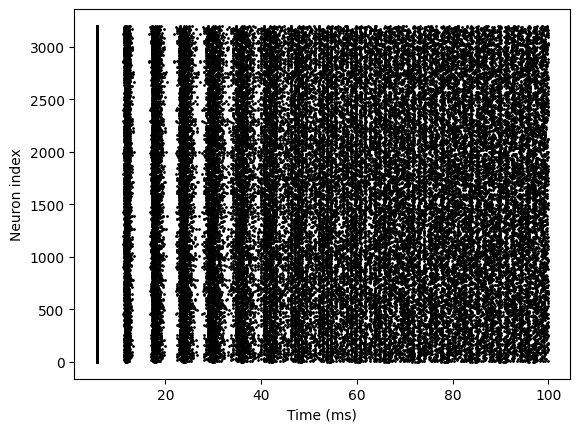

bp.visualize.raster_plot(runner.mon['ts'], runner.mon['E.spike'])