Simulating a Brain Dynamics Model#

One of the most important approaches of studying brain dynamics is building a dynamic model and doing simulation. Generally, there are two ways to construct a dynamic model. The first one is called spiking models, which attempt to finely simulate the activity of each neuron in the target population. They are named spiking models because the simulation process records the precise timing of spiking of every neuron. The second is called rate models, which regard a population of neurons with similar properties as a single firing unit and examine the firing rate of this population. In this section, we will illustrate how to build and simulate a spiking neural network, e.i. SNN.

To begin with, the BrainPy package should be imported:

import numpy as np

import brainpy as bp

import brainpy.math as bm

bm.set_platform('cpu')

bp.__version__

'2.4.4.post3'

Simulating an E-I balanced network#

Building an E-I balanced network#

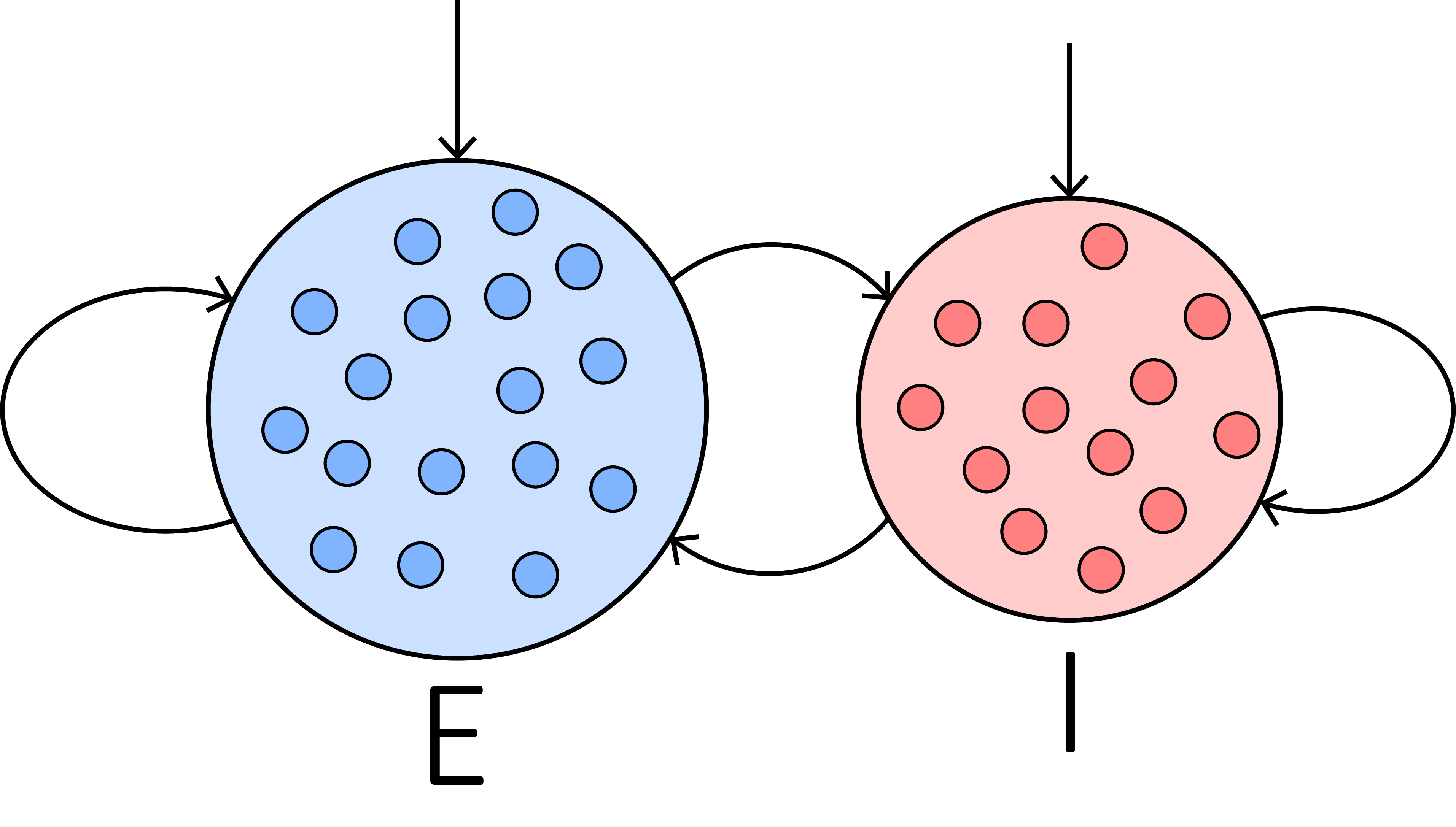

Firstly, let’s try to build an E-I balanced network. It was proposed to interpret the irregular firing of neurons in the cortical area [1]. Since the structure of an E-I balanced network is relatively simple, it is a good practice that helps users to learn the basic paradigm of brain dynamic simulation in BrainPy. The structure of a E-I balanced network is as follows:

An E-I balanced network is composed of two neuron groups and the synaptic connections between them. Specifically, they include:

a group of excitatory neurons, \(\mathrm{E}\),

a group of inhibitory neurons, \(\mathrm{I}\),

synaptic connections within the excitatory and inhibitory neuron groups, respectively, and

the inter-connections between these two groups.

To construct the network, we need to define these components one by one. BrainPy provides plenty of handy built-in models for brain dynamic simulation. They are contained in brainpy.dyn. Let’s choose the simplest yet the most canonical neuron model, the Leaky Integrate-and-Fire (LIF) model, to build the excitatory and inhibitory neuron groups:

E = bp.dyn.LifRef(3200, V_rest=-60., V_th=-50., V_reset=-60.,

tau=20., tau_ref=5., method='exp_auto',

V_initializer=bp.init.Normal(-60., 2.))

I = bp.dyn.LifRef(800, V_rest=-60., V_th=-50., V_reset=-60.,

tau=20., tau_ref=5., method='exp_auto',

V_initializer=bp.init.Normal(-60., 2.))

When defining the LIF neuron group, the parameters can be tuned according to users’ need. The first parameter denotes the number of neurons. Here the ratio of excitatory and inhibitory neurons is set to 4:1. V_rest denotes the resting potential, V_th denotes the firing threshold, V_reset denotes the reset value after firing, tau is the time constant, and tau_ref is the duration of the refractory period. method refers to the numerical integration method to be used in simulation.

Before we define the synaptic projections between different populations, let’s create a synapse model with the Exponential dynamics and conductance-based synaptic currents.

class Exponential(bp.Projection):

def __init__(self, pre, post, delay, prob, g_max, tau, E):

super().__init__()

self.pron = bp.dyn.ProjAlignPost2(

pre=pre,

delay=delay,

# Event-driven computation

comm=bp.dnn.EventCSRLinear(bp.conn.FixedProb(prob, pre=pre.num, post=post.num), g_max),

syn=bp.dyn.Expon(size=post.num, tau=tau),# Exponential synapse

out=bp.dyn.COBA(E=E), # COBA network

post=post

)

Here we use the Align post projection method (bp.dyn.ProjAlignPost2) to simulate synaptic connections. Among the parameters of the model, the first two denotes the pre- and post-synaptic neuron groups, respectively. The third one refers to the connection types. In this example, we use bp.conn.FixedProb, which connects the pre-synaptic neurons to postsynaptic neurons with a given probability (detailed information is available in Synaptic Connection). The following three parameters describes the dynamic properties of the synapse, and the last one is the numerical integration method as that in the LIF model.

Then the synaptic connections between these two groups can be defined as follows:

# projection from E to E

E2E = Exponential(E, E, 0., 0.02, 0.6, 5., 0.)

# projection from E to I

E2I = Exponential(E, I, 0., 0.02, 0.6, 5., 0.)

# projection from I to E

I2E = Exponential(I, E, 0., 0.02, 6.7, 10., -80.)

# projection from I to I

I2I = Exponential(I, I, 0., 0.02, 6.7, 10., -80.)

Putting these together, we can get an E/I balanced network.

class EINet(bp.DynamicalSystem):

def __init__(self, ne=3200, ni=800):

super().__init__()

self.E = bp.dyn.LifRef(ne, V_rest=-60., V_th=-50., V_reset=-60., tau=20., tau_ref=5.,

V_initializer=bp.init.Normal(-55., 2.))

self.I = bp.dyn.LifRef(ni, V_rest=-60., V_th=-50., V_reset=-60., tau=20., tau_ref=5.,

V_initializer=bp.init.Normal(-55., 2.))

self.E2E = Exponential(self.E, self.E, 0., 0.02, 0.6, 5., 0.)

self.E2I = Exponential(self.E, self.I, 0., 0.02, 0.6, 5., 0.)

self.I2E = Exponential(self.I, self.E, 0., 0.02, 6.7, 10., -80.)

self.I2I = Exponential(self.I, self.I, 0., 0.02, 6.7, 10., -80.)

def update(self, inp=0.):

self.E2E()

self.E2I()

self.I2E()

self.I2I()

self.E(inp)

self.I(inp)

# monitor

return self.E.spike, self.I.spike

We have successfully constructed an E-I balanced network by using BrainPy’s biult-in models. On the other hand, BrianPy also enables users to customize their own dynamic models such as neuron groups, synapses, and networks flexibly. In fact, brainpy.DynSysGroup() is a simple example of customizing a network model. Please refer to Dynamic Simulation for more information.

Running a simulation#

After building a SNN, we can use it for dynamic simulation. BrainPy provides multiple ways to simulate brain dynamics models.

First, BrainPy provides DSRunner in brainpy, which will be expanded in the Runners tutorial. Users can initialize DSRunner as followed:

net = EINet()

runner = bp.DSRunner(net, monitors=['E.spike', 'I.spike'])

To make dynamic simulation more applicable and powerful, users can monitor variable trajectories and give inputs to target neuron groups. Here we monitor the spike variable in the E and I LIF model, which refers to the spking status of the neuron group. More details of how to give inputs and monitors please refer to Dynamic Simulation.

After creating the runner, we can run a simulation by calling the runner, where the calling function receives the simulation time (usually in milliseconds) as the input. BrainPy achieves an extraordinary simulation speed with the assistance of just-in-time (JIT) compilation. Please refer to Just-In-Time Compilation for more details.

Is = bm.ones(1000) * 20. # 100 ms

_ = runner.run(inputs=Is)

The monitored spikes are stored in the runner.mon.

E_sps = runner.mon['E.spike']

I_sps = runner.mon['I.spike']

Second, users can also use brainpy.math.for_loop for the efficient simulation of any BrainPy models. To do that, we need to define a running function which defines the one-step updating function of the model.

net = EINet()

def run_fun(i):

# i: the running index

# 20.: the input

return net.step_run(i, 20.)

indices = np.arange(int(100. / bm.get_dt())) # 100. ms

E_sps, I_sps = bm.for_loop(run_fun, indices)

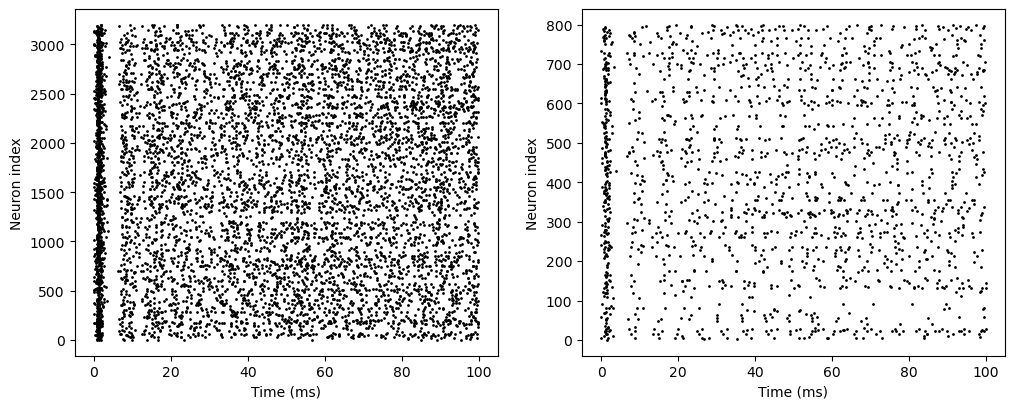

The simulation results are stored as NumPy arrays in the monitors, and can be visualized easily:

import matplotlib.pyplot as plt

plt.figure(figsize=(12, 4.5))

ts = indices * bm.get_dt()

plt.subplot(121)

bp.visualize.raster_plot(ts, E_sps, show=False)

plt.subplot(122)

bp.visualize.raster_plot(ts, I_sps, show=True)

In the code above, brianpy.visualize contains some useful functions to visualize simulation results based on the matplotlib package. Since the simulation results are stored as NumPy arrays, users can directly use matplotlib for visualization.

Simulating a decision-making network#

Building a decision-making network#

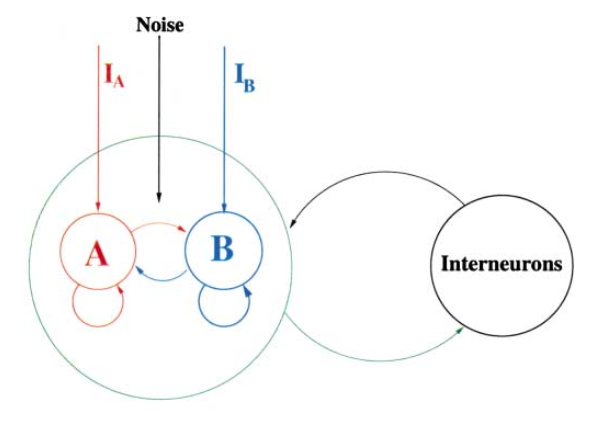

After learning how to build a E-I balanced network, we can try to handle a more complex model. In 2002, Wang proposed a decision-making model that could choose between two conflict inputs by accumulating evidence over time [2].

The structure of a decision-making network is as follows. Similar to the E-I balanced network, the decision-making network contains an excitatory and an inhibitory neuron group, forming connections within each group and between each other. What is different is that there are two specific subpopulation of neurons, A and B, that receive conflict inputs from outside (other brain areas). After given the external inputs, if the activity of A prevail over B, it means the network chooses option A, and vice versa.

To construct a decision-making network, we should build all neuron groups:

Two excitatory neuron groups with different selectivity, \(\mathrm{A}\) and \(\mathrm{B}\), and other excitatory neurons, \(\mathrm{N}\);

An inhibitory neuron group, \(\mathrm{I}\);

Neurons generating external inputs \(\mathrm{I_A}\) and \(\mathrm{I_B}\);

Neurons generating noise to all neuron groups, \(\mathrm{noise_A}\), \(\mathrm{noise_B}\), \(\mathrm{noise_N}\), and \(\mathrm{noise_I}\).

And the synapse connection between them:

Connection from excitatory neurons to others, \(\mathrm{A2A}\), \(\mathrm{A2B}\), \(\mathrm{A2N}\), \(\mathrm{A2I}\), \(\mathrm{B2A}\), \(\mathrm{B2B}\), \(\mathrm{B2N}\), \(\mathrm{B2I}\), and \(\mathrm{N2A}\), \(\mathrm{N2B}\), \(\mathrm{N2N}\), \(\mathrm{N2I}\);

Connection from inhibitory neurons to others, \(\mathrm{I2A}\), \(\mathrm{I2B}\), \(\mathrm{I2N}\), \(\mathrm{I2I}\);

Connection from external inputs to selective neuron groups, \(\mathrm{IA2A}\), \(\mathrm{IB2B}\);

Connection from noise neurons to excitatory and inhibitory neurons, \(\mathrm{noise2A}\), \(\mathrm{noise2B}\), \(\mathrm{noise2N}\), \(\mathrm{noise2I}\).

Now let’s build these neuron groups and connections.

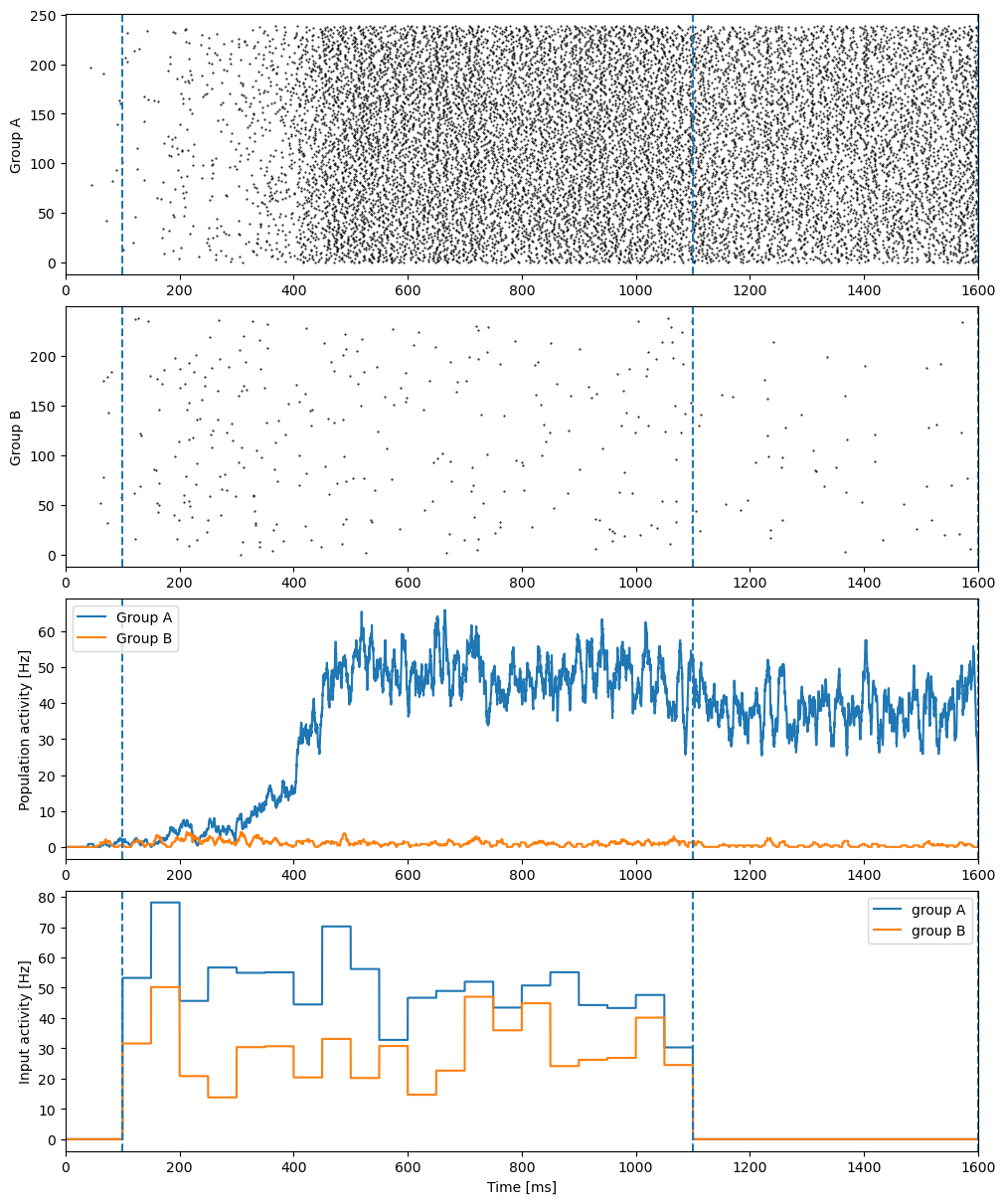

First of all, to imitate the biophysical experiments, we define three periods:

pre_stimulus_period = 100. # time before the external simuli are given

stimulus_period = 1000. # time within which the external simuli are given

delay_period = 500. # time after the external simuli are removed

total_period = pre_stimulus_period + stimulus_period + delay_period

We first define tools we used for simulation, including the visualization toolkit and Poisson noise generations.

class Tool:

def __init__(self, pre_stimulus_period=100., stimulus_period=1000., delay_period=500.):

self.pre_stimulus_period = pre_stimulus_period

self.stimulus_period = stimulus_period

self.delay_period = delay_period

self.freq_variance = 10.

self.freq_interval = 50.

self.total_period = pre_stimulus_period + stimulus_period + delay_period

def generate_freqs(self, mean):

# stimulus period

n_stim = int(self.stimulus_period / self.freq_interval)

n_interval = int(self.freq_interval / bm.get_dt())

freqs_stim = np.random.normal(mean, self.freq_variance, (n_stim, 1))

freqs_stim = np.tile(freqs_stim, (1, n_interval)).flatten()

# pre stimulus period

freqs_pre = np.zeros(int(self.pre_stimulus_period / bm.get_dt()))

# post stimulus period

freqs_delay = np.zeros(int(self.delay_period / bm.get_dt()))

all_freqs = np.concatenate([freqs_pre, freqs_stim, freqs_delay], axis=0)

return bm.asarray(all_freqs)

def visualize_results(self, mon, IA_freqs, IB_freqs, t_start=0., title=None):

fig, gs = bp.visualize.get_figure(4, 1, 3, 10)

axes = [fig.add_subplot(gs[i, 0]) for i in range(4)]

ax = axes[0]

bp.visualize.raster_plot(mon['ts'], mon['A.spike'], markersize=1, ax=ax)

if title: ax.set_title(title)

ax.set_ylabel("Group A")

ax.set_xlim(t_start, self.total_period + 1)

ax.axvline(self.pre_stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period + self.delay_period, linestyle='dashed')

ax = axes[1]

bp.visualize.raster_plot(mon['ts'], mon['B.spike'], markersize=1, ax=ax)

ax.set_ylabel("Group B")

ax.set_xlim(t_start, self.total_period + 1)

ax.axvline(self.pre_stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period + self.delay_period, linestyle='dashed')

ax = axes[2]

rateA = bp.measure.firing_rate(mon['A.spike'], width=10.)

rateB = bp.measure.firing_rate(mon['B.spike'], width=10.)

ax.plot(mon['ts'], rateA, label="Group A")

ax.plot(mon['ts'], rateB, label="Group B")

ax.set_ylabel('Population activity [Hz]')

ax.set_xlim(t_start, self.total_period + 1)

ax.axvline(self.pre_stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period + self.delay_period, linestyle='dashed')

ax.legend()

ax = axes[3]

ax.plot(mon['ts'], IA_freqs, label="group A")

ax.plot(mon['ts'], IB_freqs, label="group B")

ax.set_ylabel("Input activity [Hz]")

ax.set_xlim(t_start, self.total_period + 1)

ax.axvline(self.pre_stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period, linestyle='dashed')

ax.axvline(self.pre_stimulus_period + self.stimulus_period + self.delay_period, linestyle='dashed')

ax.legend()

ax.set_xlabel("Time [ms]")

plt.show()

The main synaptic projections used in the model are AMPA, GABAA and NMDA. Therefore, we define the synaptic projections we need.

class ExpSyn(bp.Projection):

def __init__(self, pre, post, conn, delay, g_max, tau, E):

super().__init__()

if conn == 'all2all':

comm = bp.dnn.AllToAll(pre.num, post.num, g_max)

elif conn == 'one2one':

comm = bp.dnn.OneToOne(pre.num, g_max)

else:

raise ValueError

syn = bp.dyn.Expon.desc(post.num, tau=tau)

out = bp.dyn.COBA.desc(E=E)

self.proj = bp.dyn.ProjAlignPostMg2(

pre=pre, delay=delay, comm=comm,

syn=syn, out=out, post=post

)

class NMDA(bp.Projection):

def __init__(self, pre, post, conn, delay, g_max):

super().__init__()

if conn == 'all2all':

comm = bp.dnn.AllToAll(pre.num, post.num, g_max)

elif conn == 'one2one':

comm = bp.dnn.OneToOne(pre.num, g_max)

else:

raise ValueError

syn = bp.dyn.NMDA.desc(pre.num, a=0.5, tau_decay=100., tau_rise=2.)

out = bp.dyn.MgBlock(E=0., cc_Mg=1.0)

self.proj = bp.dyn.ProjAlignPreMg2(

pre=pre, delay=delay, syn=syn,

comm=comm, out=out, post=post

)

Because there are too many neuron groups and connections, it will be much clearer if we define a new network class inheriting brainpy.DynSysGroup to accommodate all these neurons and synapses:

class DecisionMakingNet(bp.DynSysGroup):

def __init__(self, scale=1., f=0.15):

super().__init__()

# 网络中各组神经元的数目

num_exc = int(1600 * scale)

num_I, num_A, num_B = int(400 * scale), int(f * num_exc), int(f * num_exc)

num_N = num_exc - num_A - num_B

self.num_A, self.num_B, self.num_N, self.num_I = num_A, num_B, num_N, num_I

poisson_freq = 2400. # Hz

w_pos = 1.7

w_neg = 1. - f * (w_pos - 1.) / (1. - f)

g_ext2E_AMPA = 2.1 # nS

g_ext2I_AMPA = 1.62 # nS

g_E2E_AMPA = 0.05 / scale # nS

g_E2I_AMPA = 0.04 / scale # nS

g_E2E_NMDA = 0.165 / scale # nS

g_E2I_NMDA = 0.13 / scale # nS

g_I2E_GABAa = 1.3 / scale # nS

g_I2I_GABAa = 1.0 / scale # nS

neu_par = dict(V_rest=-70., V_reset=-55., V_th=-50., V_initializer=bp.init.OneInit(-70.))

# E neurons/pyramid neurons

self.A = bp.dyn.LifRef(num_A, tau=20., R=0.04, tau_ref=2., **neu_par)

self.B = bp.dyn.LifRef(num_B, tau=20., R=0.04, tau_ref=2., **neu_par)

self.N = bp.dyn.LifRef(num_N, tau=20., R=0.04, tau_ref=2., **neu_par)

# I neurons/interneurons

self.I = bp.dyn.LifRef(num_I, tau=10., R=0.05, tau_ref=1., **neu_par)

# poisson stimulus # 'freqs' as bm.Variable

self.IA = bp.dyn.PoissonGroup(num_A, freqs=bm.Variable(bm.zeros(1)))

self.IB = bp.dyn.PoissonGroup(num_B, freqs=bm.Variable(bm.zeros(1)))

# noise neurons

self.noise_B = bp.dyn.PoissonGroup(num_B, freqs=poisson_freq)

self.noise_A = bp.dyn.PoissonGroup(num_A, freqs=poisson_freq)

self.noise_N = bp.dyn.PoissonGroup(num_N, freqs=poisson_freq)

self.noise_I = bp.dyn.PoissonGroup(num_I, freqs=poisson_freq)

# define external inputs

self.IA2A = ExpSyn(self.IA, self.A, 'one2one', None, g_ext2E_AMPA, tau=2., E=0.)

self.IB2B = ExpSyn(self.IB, self.B, 'one2one', None, g_ext2E_AMPA, tau=2., E=0.)

# define AMPA projections from N

self.N2B_AMPA = ExpSyn(self.N, self.B, 'all2all', 0.5, g_E2E_AMPA * w_neg, tau=2., E=0.)

self.N2A_AMPA = ExpSyn(self.N, self.A, 'all2all', 0.5, g_E2E_AMPA * w_neg, tau=2., E=0.)

self.N2N_AMPA = ExpSyn(self.N, self.N, 'all2all', 0.5, g_E2E_AMPA, tau=2., E=0.)

self.N2I_AMPA = ExpSyn(self.N, self.I, 'all2all', 0.5, g_E2I_AMPA, tau=2., E=0.)

# define NMDA projections from N

self.N2B_NMDA = NMDA(self.N, self.B, 'all2all', 0.5, g_E2E_NMDA * w_neg)

self.N2A_NMDA = NMDA(self.N, self.A, 'all2all', 0.5, g_E2E_NMDA * w_neg)

self.N2N_NMDA = NMDA(self.N, self.N, 'all2all', 0.5, g_E2E_NMDA)

self.N2I_NMDA = NMDA(self.N, self.I, 'all2all', 0.5, g_E2I_NMDA)

# define AMPA projections from B

self.B2B_AMPA = ExpSyn(self.B, self.B, 'all2all', 0.5, g_E2E_AMPA * w_pos, tau=2., E=0.)

self.B2A_AMPA = ExpSyn(self.B, self.A, 'all2all', 0.5, g_E2E_AMPA * w_neg, tau=2., E=0.)

self.B2N_AMPA = ExpSyn(self.B, self.N, 'all2all', 0.5, g_E2E_AMPA, tau=2., E=0.)

self.B2I_AMPA = ExpSyn(self.B, self.I, 'all2all', 0.5, g_E2I_AMPA, tau=2., E=0.)

# define NMDA projections from B

self.B2B_NMDA = NMDA(self.B, self.B, 'all2all', 0.5, g_E2E_NMDA * w_pos)

self.B2A_NMDA = NMDA(self.B, self.A, 'all2all', 0.5, g_E2E_NMDA * w_neg)

self.B2N_NMDA = NMDA(self.B, self.N, 'all2all', 0.5, g_E2E_NMDA)

self.B2I_NMDA = NMDA(self.B, self.I, 'all2all', 0.5, g_E2I_NMDA)

# define AMPA projections from A

self.A2B_AMPA = ExpSyn(self.A, self.B, 'all2all', 0.5, g_E2E_AMPA * w_neg, tau=2., E=0.)

self.A2A_AMPA = ExpSyn(self.A, self.A, 'all2all', 0.5, g_E2E_AMPA * w_pos, tau=2., E=0.)

self.A2N_AMPA = ExpSyn(self.A, self.N, 'all2all', 0.5, g_E2E_AMPA, tau=2., E=0.)

self.A2I_AMPA = ExpSyn(self.A, self.I, 'all2all', 0.5, g_E2I_AMPA, tau=2., E=0.)

# define NMDA projections from A

self.A2B_NMDA = NMDA(self.A, self.B, 'all2all', 0.5, g_E2E_NMDA * w_neg)

self.A2A_NMDA = NMDA(self.A, self.A, 'all2all', 0.5, g_E2E_NMDA * w_pos)

self.A2N_NMDA = NMDA(self.A, self.N, 'all2all', 0.5, g_E2E_NMDA)

self.A2I_NMDA = NMDA(self.A, self.I, 'all2all', 0.5, g_E2I_NMDA)

# define I->E/I conn

self.I2B = ExpSyn(self.I, self.B, 'all2all', 0.5, g_I2E_GABAa, tau=5., E=-70.)

self.I2A = ExpSyn(self.I, self.A, 'all2all', 0.5, g_I2E_GABAa, tau=5., E=-70.)

self.I2N = ExpSyn(self.I, self.N, 'all2all', 0.5, g_I2E_GABAa, tau=5., E=-70.)

self.I2I = ExpSyn(self.I, self.I, 'all2all', 0.5, g_I2I_GABAa, tau=5., E=-70.)

# define external projections

self.noise2B = ExpSyn(self.noise_B, self.B, 'one2one', None, g_ext2E_AMPA, tau=2., E=0.)

self.noise2A = ExpSyn(self.noise_A, self.A, 'one2one', None, g_ext2E_AMPA, tau=2., E=0.)

self.noise2N = ExpSyn(self.noise_N, self.N, 'one2one', None, g_ext2E_AMPA, tau=2., E=0.)

self.noise2I = ExpSyn(self.noise_I, self.I, 'one2one', None, g_ext2I_AMPA, tau=2., E=0.)

Though the code seems longer than the E-I balanced network, the basic building paradigm is the same: building neuron groups and the connections among them.

Running a simulation#

After building it, the simulation process will be much the same as running a E-I balanced network. First we should wrap the network into a runner:

tool = Tool()

net = DecisionMakingNet()

mu0 = 40.

coherence = 25.6

IA_freqs = tool.generate_freqs(mu0 + mu0 / 100. * coherence)

IB_freqs = tool.generate_freqs(mu0 - mu0 / 100. * coherence)

def give_input():

i = bp.share['i']

net.IA.freqs[0] = IA_freqs[i]

net.IB.freqs[0] = IB_freqs[i]

Then we call the runner to run the simulation:

runner = bp.DSRunner(net, inputs=give_input, monitors=['A.spike', 'B.spike'])

runner.run(tool.total_period)

Finally, we visualize the simulation result by using matplotlib:

tool.visualize_results(runner.mon, IA_freqs, IB_freqs)

For more information about brain dynamic simulation, please refer to Dynamics Simulation in the BDP tutorial.

Simulating a firing rate-based network#

Neural mass model#

A neural mass models is a low-dimensional population model of spiking neural networks. It aims to describe the coarse grained activity of large populations of neurons and synapses. Mathematically, it is a dynamical system of non-linear ODEs. A classical neural mass model is the two-dimensional Wilson–Cowan model. This model tracks the activity of an excitatory population of neurons coupled to an inhibitory population. With the augmentation of such models by more realistic forms of synaptic and network interaction they have proved especially successful in providing fits to neuro-imaging data.

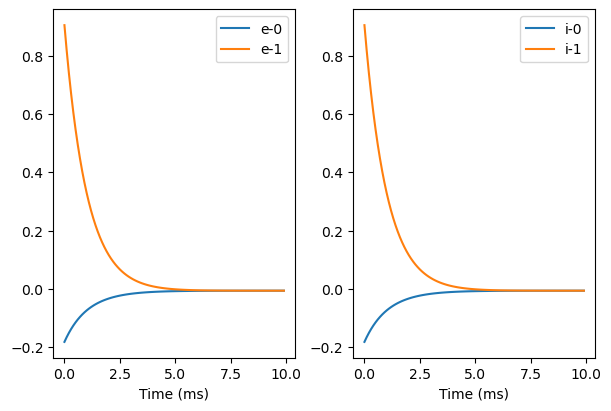

Here, let’s try the Wilson-Cowan model.

wc = bp.dyn.WilsonCowanModel(2,

wEE=16., wIE=15., wEI=12., wII=3.,

E_a=1.5, I_a=1.5, E_theta=3., I_theta=3.,

method='exp_euler_auto',

x_initializer=bm.asarray([-0.2, 1.]),

y_initializer=bm.asarray([0.0, 1.]))

runner = bp.DSRunner(wc, monitors=['x', 'y'], inputs=['input', -0.5])

runner.run(10.)

fig, gs = bp.visualize.get_figure(1, 2, 4, 3)

ax = fig.add_subplot(gs[0, 0])

bp.visualize.line_plot(runner.mon.ts, runner.mon.x, plot_ids=[0, 1], legend='e', ax=ax)

ax = fig.add_subplot(gs[0, 1])

bp.visualize.line_plot(runner.mon.ts, runner.mon.x, plot_ids=[0, 1], legend='i', ax=ax, show=True)

We can see this model at least has two stable states.

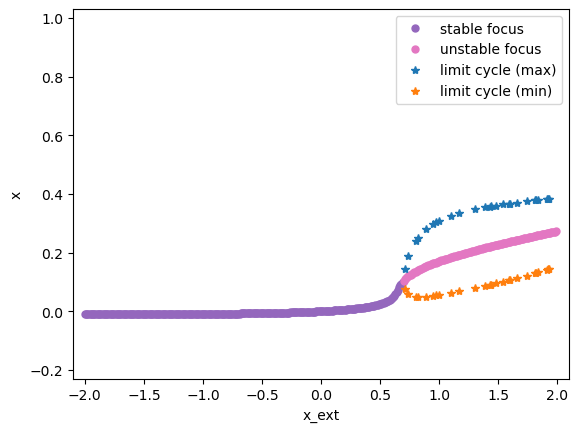

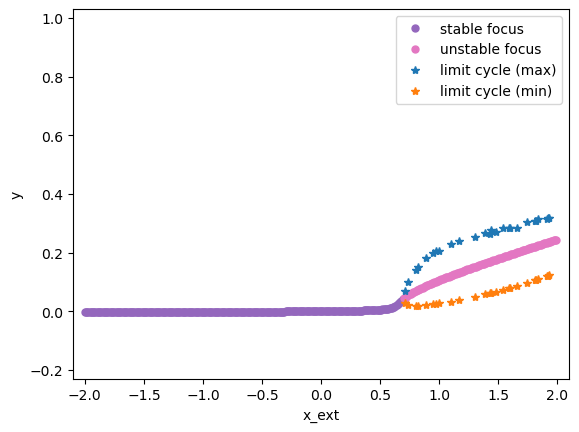

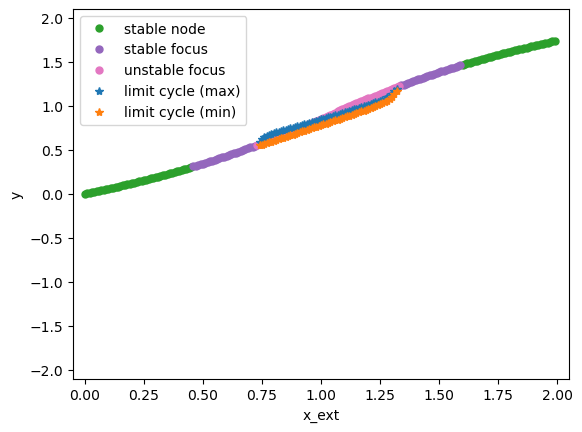

Bifurcation diagram

With the automatic analysis module in BrainPy, we can easily inspect the bifurcation digram of the model. Bifurcation diagrams can give us an overview of how different parameters of the model affect its dynamics (the details of the automatic analysis support of BrainPy please see the introduction in Analyzing a Dynamical Model and tutorials in Dynamics Analysis). In this case, we make x_ext as a bifurcation parameter, and try to see how the system behavior changes with the change of x_ext.

bf = bp.analysis.Bifurcation2D(

wc,

target_vars={'x': [-0.2, 1.], 'y': [-0.2, 1.]},

target_pars={'x_ext': [-2, 2]},

pars_update={'y_ext': 0.},

resolutions={'x_ext': 0.01}

)

bf.plot_bifurcation()

bf.plot_limit_cycle_by_sim(duration=500)

bf.show_figure()

I am making bifurcation analysis ...

I am filtering out fixed point candidates with auxiliary function ...

I am trying to find fixed points by optimization ...

There are 40000 candidates

I am trying to filter out duplicate fixed points ...

Found 400 fixed points.

I am plotting the limit cycle ...

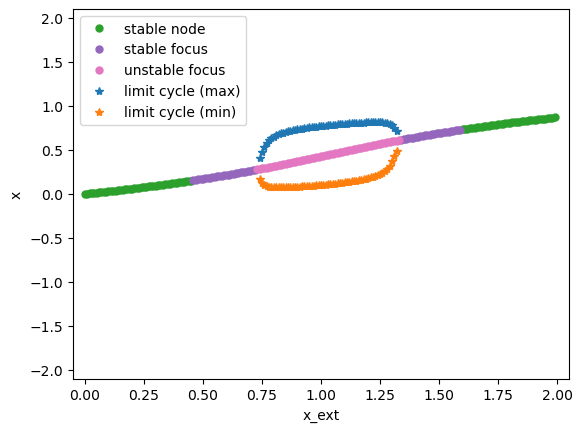

Similarly, simulating and analyzing a rate-based FitzHugh-Nagumo model is also a piece of cake by using BrainPy.

fhn = bp.rates.FHN(1, method='exp_auto')

bf = bp.analysis.Bifurcation2D(

fhn,

target_vars={'x': [-2, 2], 'y': [-2, 2]},

target_pars={'x_ext': [0, 2]},

pars_update={'y_ext': 0.},

resolutions={'x_ext': 0.01}

)

bf.plot_bifurcation()

bf.plot_limit_cycle_by_sim(duration=500)

bf.show_figure()

I am making bifurcation analysis ...

I am filtering out fixed point candidates with auxiliary function ...

I am trying to find fixed points by optimization ...

There are 20000 candidates

I am trying to filter out duplicate fixed points ...

Found 200 fixed points.

I am plotting the limit cycle ...

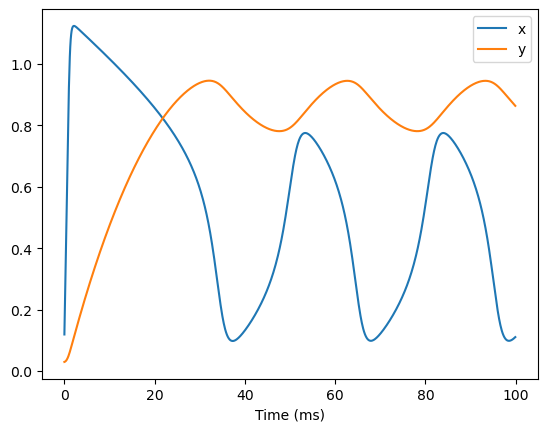

In this model, we find that when the external input x_ext has the value in [0.72, 1.4], the model will generate limit cycles. We can verify this by simulation.

runner = bp.DSRunner(fhn, monitors=['x', 'y'], inputs=['input', 1.0])

runner.run(100.)

bp.visualize.line_plot(runner.mon.ts, runner.mon.x, legend='x')

bp.visualize.line_plot(runner.mon.ts, runner.mon.y, legend='y', show=True)

Whole-brain model#

A rate-based whole-brain model is a network model which consists of coupled brain regions. Each brain region is represented by a neural mass model which is connected to other brain regions according to the underlying network structure of the brain, also known as the connectome. In order to illustrate how to use BrainPy’s support for whole-brain modeling, here we provide a processed data in the following link:

A processed data from ConnectomeDB of the Human Connectome Project (HCP): https://share.weiyun.com/wkPpARKy

Please download the dataset and place it in your favorite PATH.

PATH = './data/hcp.npz'

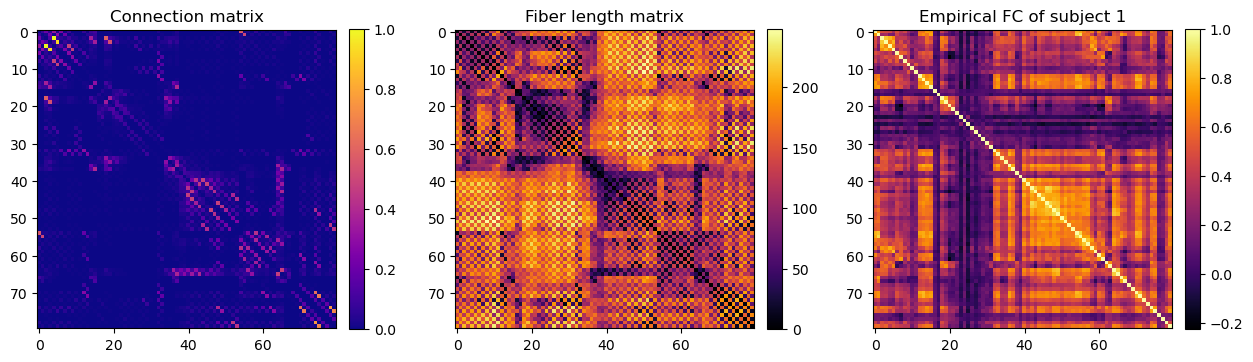

In general, a dataset for whole-brain modeling consists of the following parts:

1. A structural connectivity matrix which captures the synaptic connection strengths between brain areas. It often derived from DTI tractography of the whole brain. The connectome is then typically parcellated in a preferred atlas (for example the AAL2 atlas) and the number of axonal fibers connecting each brain area with every other area is counted. This number serves as an indication of the synaptic coupling strengths between the areas of the brain.

2. A delay matrix which calculated from the average length of the axonal fibers connecting each brain area with another.

3. A set of functional data that can act as a target for model optimization. Resting-state fMRI offers an easy and fairly unbiased way for calibrating whole-brain models. EEG data could be used as well.

Now, let’s load the dataset.

data = bm.load(PATH)

# The structural connectivity matrix

data['Cmat'].shape

(80, 80)

# The fiber length matrix

data['Dmat'].shape

(80, 80)

# The functional data for 7 subjects

data['FCs'].shape

(7, 80, 80)

Let’s have a look what the data looks like.

import matplotlib.pyplot as plt

plt.rcParams['image.cmap'] = 'plasma'

fig, axs = plt.subplots(1, 3, figsize=(15, 5))

fig.subplots_adjust(wspace=0.28)

im = axs[0].imshow(data['Cmat'])

axs[0].set_title("Connection matrix")

fig.colorbar(im, ax=axs[0], fraction=0.046, pad=0.04)

im = axs[1].imshow(data['Dmat'], cmap='inferno')

axs[1].set_title("Fiber length matrix")

fig.colorbar(im, ax=axs[1], fraction=0.046, pad=0.04)

im = axs[2].imshow(data['FCs'][0], cmap='inferno')

axs[2].set_title("Empirical FC of subject 1")

fig.colorbar(im, ax=axs[2], fraction=0.046, pad=0.04)

plt.show()

Let’s first get the delay matrix according to the fiber length matrix, the signal transmission speed between areas, and the numerical integration step dt. Here, we assume the axonal transmission speed is 20 and the simulation time step dt=0.1 ms.

sigal_speed = 20.

# the number of the delay steps

delay_mat = data['Dmat'] / sigal_speed / bm.get_dt()

delay_mat = bm.asarray(delay_mat, dtype=bm.int_)

The connectivity matrix can be directly obtained through the structural connectivity matrix, which times a global coupling strength parameter gc. b

gc = 1.

conn_mat = bm.asarray(data['Cmat'] * gc)

# It is necessary to exclude the self-connections

bm.fill_diagonal(conn_mat, 0)

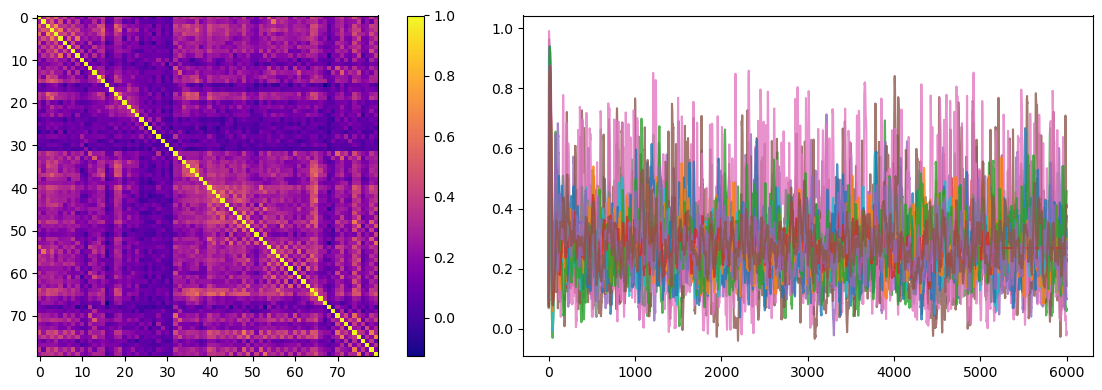

We now are ready to instantiate a whole-brain model with the neural mass model and the dataset the processed before.

class WholeBrainNet(bp.Network):

def __init__(self, Cmat, Dmat):

super(WholeBrainNet, self).__init__()

self.fhn = bp.dyn.FHN(

80,

x_ou_sigma=0.01,

y_ou_sigma=0.01,

method='exp_auto'

)

self.syn = bp.dyn.DiffusiveCoupling(

self.fhn.x,

self.fhn.x,

var_to_output=self.fhn.input,

conn_mat=Cmat,

delay_steps=Dmat.astype(bm.int_),

initial_delay_data=bp.init.Uniform(0, 0.05)

)

net = WholeBrainNet(conn_mat, delay_mat)

runner = bp.DSRunner(net, monitors=['fhn.x'], inputs=['fhn.input', 0.72])

runner.run(6e3)

The simulated results can be used to estimate the functional correlation matrix.

fig, axs = plt.subplots(1, 2, figsize=(12, 4))

fc = bp.measure.functional_connectivity(runner.mon['fhn.x'])

ax = axs[0].imshow(fc)

plt.colorbar(ax, ax=axs[0])

axs[1].plot(runner.mon.ts, runner.mon['fhn.x'][:, ::5], alpha=0.8)

plt.tight_layout()

plt.show()

We can compute the element-wise Pearson correlation of the functional connectivity matrices of the simulated data to the empirical data to estimate how well the model captures the inter-areal functional correlations found in empirical resting-state recordings.

scores = [bp.measure.matrix_correlation(fc, fcemp)

for fcemp in data['FCs']]

print("Correlation per subject:", [f"{s:.2}" for s in scores])

print("Mean FC/FC correlation: {:.2f}".format(bm.mean(bm.asarray(scores))))

Correlation per subject: ['0.63', '0.5', '0.58', '0.51', '0.56', '0.49', '0.47']

Mean FC/FC correlation: 0.53

References#

van Vreeswijk, C., & Sompolinsky, H. (1996). Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science (New York, N.Y.), 274(5293), 1724–1726. https://doi.org/10.1126/science.274.5293.1724

Wang X. J. (2002). Probabilistic decision making by slow reverberation in cortical circuits. Neuron, 36(5), 955–968. https://doi.org/10.1016/s0896-6273(02)01092-9