Using Built-in Models

BrainPy supports modular programming and makes models easy to debug. To build a complex brain dynamics model, you only need to combine its building blocks. This section describes the building blocks BrainPy provides and how to use them.

import numpy as np

import brainpy as bp

import brainpy.math as bm

bm.set_platform('cpu')

bp.__version__

'2.4.6'

Initializing a neuron model

All neuron models implemented in BrainPy are subclasses of brainpy.dyn.NeuDyn. To initialize a neuron model, you only need to provide the geometry (size) of the neuron population.

hh = bp.dyn.HH(size=1) # only 1 neuron

hh = bp.dyn.HH(size=10) # 10 neurons in a group

hh = bp.dyn.HH(size=(10, 10), keep_size=True) # a grid of (10, 10) neurons in a group

hh = bp.dyn.HH(size=(5, 4, 2), keep_size=True) # a column of (5, 4, 2) neurons in a group
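The relationship between size, keep_size, and the shape of each per-neuron variable can be sketched in plain Python. The helper variable_shape below is hypothetical and not part of BrainPy; it assumes that a multi-dimensional size is flattened to a single axis unless keep_size=True is given.

```python
import math

def variable_shape(size, keep_size=False):
    """Hypothetical helper: shape of a per-neuron variable for a given `size`."""
    # Accept either an int (one population axis) or a tuple of ints (a grid).
    dims = (size,) if isinstance(size, int) else tuple(size)
    num = math.prod(dims)  # total number of neurons in the group
    # Assumption: without keep_size the geometry is flattened to one axis.
    return dims if keep_size else (num,)

print(variable_shape(10))                         # (10,)
print(variable_shape((10, 10)))                   # (100,)
print(variable_shape((5, 4, 2), keep_size=True))  # (5, 4, 2)
```

Under this assumption, keep_size only changes how variables are laid out, not how many neurons the group contains.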

Generally speaking, users can set two types of arguments:

- parameters: the model parameters, such as gNa, which refers to the maximum conductance of the sodium channel in the brainpy.neurons.HH model.
- variables: the model variables, such as V, which refers to the membrane potential of a neuron model.

By default, model parameters are homogeneous, that is, plain scalar values.

hh = bp.dyn.HH(5) # there are five neurons in this group

hh.gNa

120.0

However, neuron models also support heterogeneous parameters when performing computations in a neuron group. Heterogeneous parameters can be initialized in several ways.

1. Array

Users can directly provide an array as the parameter.

hh = bp.dyn.HH(5, gNa=bm.random.uniform(110, 130, size=5))

hh.gNa

Array(value=Array([127.87629 , 117.25309 , 113.342834, 128.16406 , 122.6783 ], dtype=float32), dtype=float32)

2. Initializer

BrainPy provides rich support for initialization. One can pass an initializer as the parameter so that the model generates a value for every neuron in the group.

hh = bp.dyn.HH(5, ENa=bp.init.OneInit(50.))

hh.ENa

Array(value=Array([50., 50., 50., 50., 50.]), dtype=float32)

3. Callable function

You can also directly provide a callable function that receives a shape argument.

hh = bp.dyn.HH(5, ENa=lambda shape: bm.random.uniform(40, 60, shape))

hh.ENa

Array(value=Array([40.24787 , 48.84902 , 54.918022, 57.736324, 57.20079 ]), dtype=float32)
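The three options above (array, initializer, callable) can all be reduced to one rule: anything callable is called with the target shape, and anything else is used as a value. The NumPy sketch below illustrates that rule. The function resolve_parameter is a hypothetical name introduced here for illustration, it is not BrainPy's own implementation, and it assumes that initializer objects are callable with a shape.

```python
import numpy as np

def resolve_parameter(value, shape):
    """Hypothetical helper: turn a user-supplied parameter into a usable value."""
    if callable(value):
        # Initializer objects and plain functions are both called with the shape.
        return np.asarray(value(shape), dtype=np.float32)
    if np.isscalar(value):
        # Homogeneous parameter: one scalar used for every neuron.
        return float(value)
    arr = np.asarray(value, dtype=np.float32)
    if arr.shape != tuple(shape):
        raise ValueError(f"expected shape {tuple(shape)}, got {arr.shape}")
    return arr

rng = np.random.default_rng(0)
shape = (5,)
print(resolve_parameter(120.0, shape))                          # scalar
print(resolve_parameter(rng.uniform(110, 130, size=5), shape))  # array
print(resolve_parameter(lambda s: np.full(s, 50.0), shape))     # callable
```

Checking the array shape up front, as this sketch does, surfaces a mismatch between the parameter and the group size at construction time rather than in the middle of a simulation.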

Here, let’s see how the heterogeneous parameters influence our model simulation.

# we create 3 neurons in a group. Each neuron has a unique "gNa"

model = bp.dyn.HH(3, gNa=bp.init.Uniform(min_val=100, max_val=140))

inputs = np.ones(int(100./ bm.dt)) * 6. # 100 ms

runner = bp.DSRunner(model, monitors=['V'])

runner.run(inputs=inputs)

bp.visualize.line_plot(runner.mon.ts, runner.mon.V, plot_ids=[0, 1, 2], show=True)

Similarly, the initial values of a variable can be set in the same three ways: Array, Initializer, and Callable function. For example,

hh = bp.dyn.HH(
    3,
    V_initializer=bp.init.Uniform(-80., -60.),  # Initializer
    m_initializer=lambda shape: bm.random.random(shape),  # function
    h_initializer=bm.random.random(3),  # Array
)

print('V: ', hh.V)

print('m: ', hh.m)

print('h: ', hh.h)

V: Variable(value=Array([-62.370342, -75.48245 , -72.79056 ]), dtype=float32)

m: Variable(value=Array([0.39839578, 0.22285819, 0.6400248 ]), dtype=float32)

h: Variable(value=Array([0.75309145, 0.08168364, 0.24722028]), dtype=float32)

Initializing a synapse model

To initialize a synapse model, you need to provide its pre-synaptic group (pre), its post-synaptic group (post), and the connection method between them (conn). Below is an example that creates an Exponential synapse model:

class Exponential(bp.Projection):
    def __init__(self, pre, post, prob, weight, delay=None, tau=5., E=0.):
        super().__init__()
        self.proj = bp.dyn.ProjAlignPostMg2(
            pre=pre,
            delay=delay,
            comm=bp.dnn.CSRLinear(bp.conn.FixedProb(prob, pre=pre.num, post=post.num), weight=weight),
            syn=bp.dyn.Expon.desc(size=post.num, tau=tau, sharding=[bm.sharding.NEU_AXIS]),
            out=bp.dyn.COBA.desc(E=E),
            post=post
        )

neu = bp.dyn.Lif(10)

# here we create a synaptic projection within a population

syn = Exponential(neu, neu, 0.02, 0.1)
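To make the roles of tau and E concrete, here is a minimal NumPy sketch of a single exponential conductance with a conductance-based (COBA) output. It is not BrainPy's implementation. It assumes the usual form dg/dt = -g / tau with a jump of weight per presynaptic spike and a current g * (E - V), and it holds the postsynaptic potential V_post fixed for simplicity. The function name and its default values are chosen here for illustration.

```python
import numpy as np

def simulate_expon_coba(spikes, weight=0.1, tau=5.0, E=0.0, V_post=-65.0, dt=0.1):
    """Sketch of dg/dt = -g / tau with a jump of `weight` per presynaptic spike."""
    decay = np.exp(-dt / tau)            # exact decay factor over one time step
    g = 0.0
    g_trace = np.empty(len(spikes))
    for i, spiked in enumerate(spikes):
        g = g * decay + weight * spiked  # decay first, then add the incoming spike
        g_trace[i] = g
    # COBA output: the current scales with the distance of V_post from E.
    # Assumption: V_post is held constant instead of being simulated.
    current = g_trace * (E - V_post)
    return g_trace, current

spikes = np.zeros(200)
spikes[[10, 50, 55]] = 1.0               # three presynaptic spikes
g, I = simulate_expon_coba(spikes)
print(g.max(), I.max())
```

A larger tau makes each spike's contribution last longer, while E sets the sign and size of the resulting current relative to the membrane potential.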

BrainPy’s built-in synapse models support heterogeneous synaptic weights through Array, Initializer, and Callable function. For example,

syn = Exponential(neu, neu, 0.5, weight=bp.init.Uniform(min_val=0.1, max_val=1.))

syn.proj.comm.weight

Array(value=Array([0.8229989 , 0.1127008 , 0.21375097, 0.28008685, 0.8893519 ,

0.59226 , 0.37199625, 0.9018673 , 0.45918232, 0.41741067,

0.19417804, 0.10889743, 0.11677239, 0.34665036, 0.99347353,

0.86979085, 0.8911144 , 0.78797114, 0.34128788, 0.855673 ,

0.29981846, 0.24433278, 0.39912638, 0.8952131 , 0.6897643 ,

0.28788885, 0.68920213, 0.6843358 , 0.37883654, 0.70628715,

0.5746923 , 0.10819844, 0.4299777 , 0.2163685 , 0.7592538 ,

0.95128614, 0.2900757 , 0.4627868 , 0.6950972 , 0.83101374,

0.73066264, 0.80973125, 0.5567733 , 0.8197859 , 0.12235235,

0.34319997, 0.4163569 , 0.6115145 , 0.38117126, 0.95603216], dtype=float32),

dtype=float32)

However, the built-in synapse models in BrainPy only support homogeneous synaptic parameters, such as the time constant \(\tau\). Users who need heterogeneous synaptic parameters can customize their own synapse models.

Similarly, the synaptic variables can be initialized heterogeneously by using Array, Initializer, and Callable function.

Changing model parameters during simulation

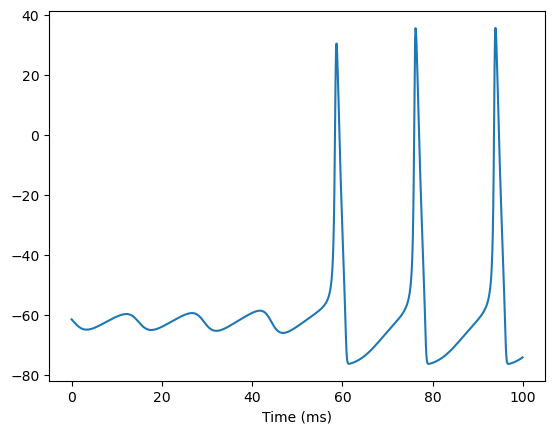

In BrainPy, every variable that changes dynamically (whether it is changed inside or outside a jitted function) should be marked as a brainpy.math.Variable. BrainPy’s built-in models also support modifying model parameters during simulation.

For example, suppose you want to keep gNa fixed during the first 100 ms of simulation and then decrease its value in the following runs. In this case, you can provide gNa as an instance of brainpy.math.Variable when initializing the model.

hh = bp.dyn.HH(5, gNa=bm.Variable(bm.asarray([120.])))

runner = bp.DSRunner(hh, monitors=['V'])

# the first running

inputs = np.ones(int(100./ bm.dt)) * 6. # 100 ms

runner.run(inputs=inputs)

bp.visualize.line_plot(runner.mon.ts, runner.mon.V, show=True)

# change the gNa first

hh.gNa[:] = 50.

# the second running

runner.run(inputs=inputs)

bp.visualize.line_plot(runner.mon.ts, runner.mon.V, show=True)
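The update pattern used above, writing into the parameter in place with [:] rather than rebinding the name, can be illustrated with a small NumPy analogy. ToyNeuron is a hypothetical class made up for this sketch. It only shows why an in-place write is visible to the model that holds the parameter; it does not reproduce JIT compilation or the behaviour of brainpy.math.Variable.

```python
import numpy as np

class ToyNeuron:
    """Toy stand-in for a model that stores a parameter by reference."""

    def __init__(self, gNa):
        self.gNa = gNa  # keeps a reference to the array, does not copy it

    def sodium_current(self, m3h, V, ENa=50.0):
        # Simplified sodium current: conductance * gating * driving force.
        return self.gNa * m3h * (V - ENa)

gNa = np.array([120.0])
neuron = ToyNeuron(gNa)
before = neuron.sodium_current(0.5, -65.0)

neuron.gNa[:] = 50.0  # in-place write, mirroring hh.gNa[:] = 50.
after = neuron.sodium_current(0.5, -65.0)
print(before, after)
```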

Examples of using built-in models

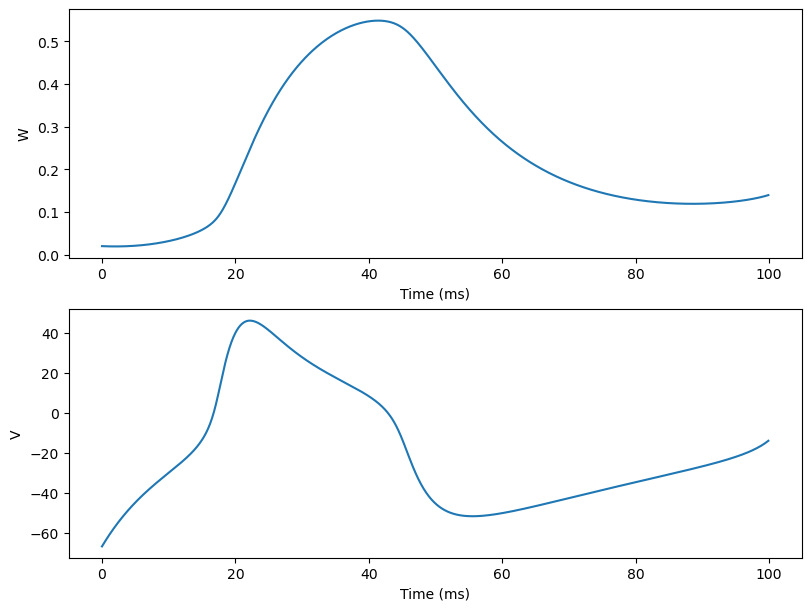

Here we show how to simulate a well-known neuron model: the Morris-Lecar model, a two-dimensional “reduced” excitation model applicable to systems with two non-inactivating voltage-sensitive conductances.

group = bp.dyn.MorrisLecar(1)

Users can then use the tools provided by BrainPy to simulate the Morris-Lecar neuron model. We do not go into the details here; please read the corresponding tutorials to learn more.

runner = bp.DSRunner(group, monitors=['V', 'W'])

inputs = np.ones(int(100./ bm.dt)) * 100. # 100 ms

runner.run(inputs=inputs)

fig, gs = bp.visualize.get_figure(2, 1, 3, 8)

fig.add_subplot(gs[0, 0])

bp.visualize.line_plot(runner.mon.ts, runner.mon.W, ylabel='W')

fig.add_subplot(gs[1, 0])

bp.visualize.line_plot(runner.mon.ts, runner.mon.V, ylabel='V', show=True)

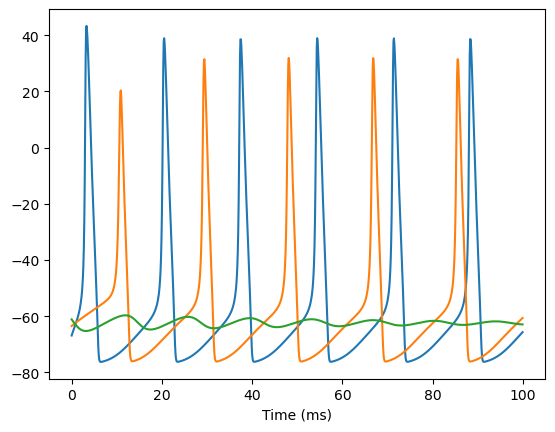
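For readers who want to see the two variables V and W spelled out, the following is a forward-Euler sketch of the Morris-Lecar equations in plain NumPy. It is independent of BrainPy's implementation. The parameter values and initial state are assumptions chosen for illustration (commonly quoted values, not read from the library), and the function name is hypothetical.

```python
import numpy as np

def simulate_morris_lecar(I_ext=100.0, duration=100.0, dt=0.01):
    """Forward-Euler sketch of the two-variable Morris-Lecar model."""
    # Assumed parameter values for illustration only.
    g_Ca, g_K, g_leak = 4.4, 8.0, 2.0        # maximal conductances
    V_Ca, V_K, V_leak = 130.0, -84.0, -60.0  # reversal potentials (mV)
    V1, V2, V3, V4 = -1.2, 18.0, 2.0, 30.0   # gating-curve shape constants
    C, phi = 20.0, 0.04                      # capacitance and rate scale

    n_steps = int(round(duration / dt))
    V = np.empty(n_steps)
    W = np.empty(n_steps)
    v, w = -20.0, 0.02                       # arbitrary initial state
    for i in range(n_steps):
        m_inf = 0.5 * (1.0 + np.tanh((v - V1) / V2))  # instantaneous Ca gate
        w_inf = 0.5 * (1.0 + np.tanh((v - V3) / V4))  # steady-state K gate
        tau_w = 1.0 / (phi * np.cosh((v - V3) / (2.0 * V4)))
        dv = (-g_Ca * m_inf * (v - V_Ca) - g_K * w * (v - V_K)
              - g_leak * (v - V_leak) + I_ext) / C
        dw = (w_inf - w) / tau_w
        v, w = v + dt * dv, w + dt * dw
        V[i], W[i] = v, w
    return np.arange(n_steps) * dt, V, W

ts, V, W = simulate_morris_lecar()
print(V.min(), V.max(), W.min(), W.max())
```

Both conductances are non-inactivating: the calcium gate follows V instantaneously through m_inf, while the potassium gate W relaxes toward w_inf with a voltage-dependent time constant.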

Next, we give an intuitive picture of how to build a network composed of different neuron and synapse models. Users can simply initialize these models as shown below and pass them into brainpy.DynSysGroup.

class AMPA(bp.Projection):
    def __init__(self, pre, post, delay, g_max, E=0.):
        super().__init__()
        self.proj = bp.dyn.ProjAlignPreMg2(
            pre=pre,
            delay=delay,
            syn=bp.dyn.AMPA.desc(pre.num, alpha=0.98, beta=0.18, T=0.5, T_dur=0.5),
            comm=bp.dnn.AllToAll(pre.num, post.num, g_max),
            out=bp.dyn.COBA(E=E),
            post=post,
        )

neu1 = bp.neurons.HH(1)

neu2 = bp.neurons.HH(1)

syn1 = AMPA(neu1, neu2, None, 1.)

net = bp.DynSysGroup(pre=neu1, syn=syn1, post=neu2)
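The parameters alpha, beta, T, and T_dur passed to the AMPA synapse above can be read through a first-order kinetic scheme. The NumPy sketch below assumes the standard form dg/dt = alpha * [T] * (1 - g) - beta * g, where the transmitter concentration [T] equals T for T_dur milliseconds after each presynaptic spike and is zero otherwise. It is an illustration under that assumption, not BrainPy's code, and the function name is hypothetical.

```python
import numpy as np

def simulate_ampa_gate(spike_times, duration=50.0, dt=0.1,
                       alpha=0.98, beta=0.18, T=0.5, T_dur=0.5):
    """Euler sketch of dg/dt = alpha * [T] * (1 - g) - beta * g."""
    n_steps = int(round(duration / dt))
    release_steps = int(round(T_dur / dt))   # steps with transmitter present
    spike_steps = {int(round(s / dt)) for s in spike_times}
    g = np.zeros(n_steps)
    gate = 0.0
    remaining = 0                            # release steps left after a spike
    for i in range(n_steps):
        if i in spike_steps:
            remaining = release_steps
        conc = T if remaining > 0 else 0.0   # transmitter concentration [T]
        gate += dt * (alpha * conc * (1.0 - gate) - beta * gate)
        remaining = max(remaining - 1, 0)
        g[i] = gate
    return np.arange(n_steps) * dt, g

ts, g = simulate_ampa_gate(spike_times=[5.0])
print(ts[np.argmax(g)], g.max())
```

In this scheme the gating variable rises only while transmitter is present and then decays at rate beta, so T_dur controls how long each spike keeps opening channels.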

By selecting a suitable runner, users can simulate the network efficiently and plot the simulation results.

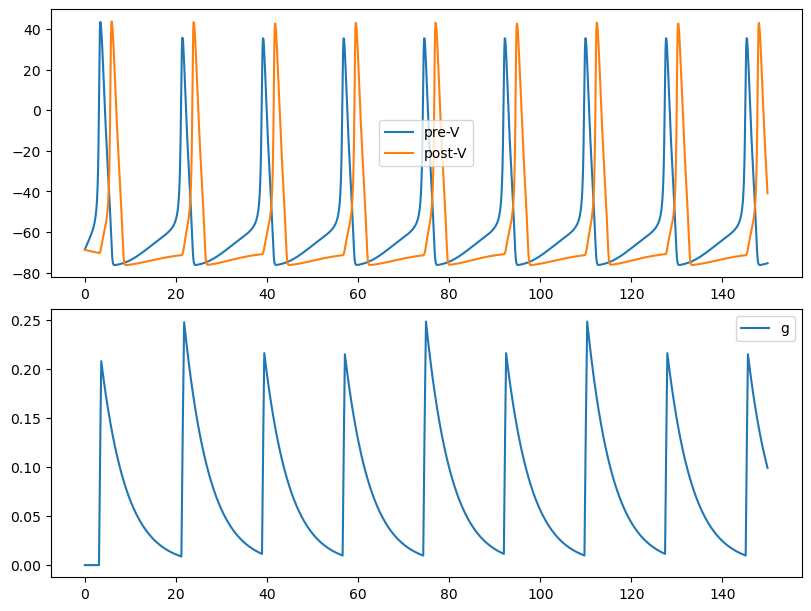

runner = bp.DSRunner(net,
                     inputs=(neu1.input, 6.),
                     monitors={'pre.V': neu1.V, 'post.V': neu2.V, 'syn.g': syn1.proj.refs['syn'].g})

runner.run(150.)

import matplotlib.pyplot as plt

fig, gs = bp.visualize.get_figure(2, 1, 3, 8)

fig.add_subplot(gs[0, 0])

plt.plot(runner.mon.ts, runner.mon['pre.V'], label='pre-V')

plt.plot(runner.mon.ts, runner.mon['post.V'], label='post-V')

plt.legend()

fig.add_subplot(gs[1, 0])

plt.plot(runner.mon.ts, runner.mon['syn.g'], label='g')

plt.legend()

plt.show()